by Andrew Zolli | 8:00 AM September 26, 2012

Air France A330-200 F-GZCP lands at Paris-Charles de Gaulle Airport. The aircraft was destroyed in Air France Flight 447. (Photo credit: Wikipedia)

What — or who — was to blame? French investigators … singled out one all-too-common culprit: human error. Their report found that the pilots, although well-trained, had fatally misdiagnosed the reasons that the plane had gone into a stall, and that their subsequent errors, based on this initial mistake, led directly to the catastrophe.

Pitot tube (Photo credit: Wikipedia)

Confusingly, at the height of the danger, a blaring alarm in the cockpit indicating the stall went silent — suggesting exactly the opposite of what was actually happening. The plane's cockpit voice recorder captured the pilots' last, bewildered exchange:

(Pilot 2) But what's happening?

Less than two seconds later, they were dead.

Recife - The frigate Constituição arrives at the Port of Recife, transporting wreckage of the Air France Airbus A330 that was involved in an accident on 31 May 2009. (Photo credit: Wikipedia)

Researchers find … circumstances where adding safety enhancements to systems actually makes crisis situations more dangerous, not less so. The reasons are rooted partly in the pernicious nature of complexity, and partly in the way that human beings psychologically respond to risk.

We rightfully add safety systems to things … in an effort to encourage them to run safely yet ever more efficiently. Each of these safety features, however, also increases the complexity of the whole. Add enough of them, and soon these otherwise beneficial features become potential sources of risk themselves, as the number of possible interactions (both anticipated and unanticipated) between various components becomes incomprehensibly large.
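For a rough sense of how quickly this gets out of hand, the back-of-the-envelope Python sketch below counts possible interactions among n components. It rests on two simplifying assumptions of ours, not the author's: that any two components might interact, and that any group of two or more might interact jointly. Real systems are far sparser, and the function names are illustrative only.

```python
from math import comb


def pairwise_interactions(n: int) -> int:
    """Distinct pairs among n components (n choose 2).

    Illustrative assumption: every pair of components can interact.
    """
    return comb(n, 2)


def multiway_interactions(n: int) -> int:
    """Distinct groups of two or more components: 2**n - n - 1.

    Illustrative assumption: any such group can interact jointly.
    All 2**n subsets, minus the n single components and the empty set.
    """
    return 2 ** n - n - 1


if __name__ == "__main__":
    for n in (5, 10, 20, 40):
        print(f"{n:>3} components: {pairwise_interactions(n):>4} pairs, "
              f"{multiway_interactions(n):,} possible multi-component groups")
```

Going from 20 to 40 components roughly quadruples the number of pairs (190 to 780), but multiplies the possible multi-component groups by about a million, which is the sense in which the interaction space becomes "incomprehensibly large."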

This, in turn, amplifies uncertainty when things go wrong, making crises harder to correct: … Imagine facing a dozen … alerts simultaneously, and having to decide what's true and false … at the same time. Imagine further that, if you choose incorrectly, you will push the system into an unrecoverable catastrophe. Now, give yourself just a few seconds to make the right choice. …

Caltech systems scientist John Doyle has coined a term for such systems: … Robust-Yet-Fragile. One of their hallmark features is that they are good at dealing with anticipated threats but terrible at dealing with unanticipated ones. As the complexity of these systems grows, both the sources and the severity of possible disruptions increase, even as the size required for potential 'triggering events' decreases: it can take only a tiny event, in the wrong place or at the wrong time, to spark a calamity.

Variations of such "complexity risk" contributed to JPMorgan's recent multibillion-dollar hedging fiasco, as well as to the challenge of rebooting the US economy in the wake of the 2008 financial crisis. (Some of the derivatives contracts that banks had previously signed with each other were up to a million pages long, … Untangling the resulting counterparty risk, that is, determining who was on the hook to whom, was rendered all but impossible. This in turn made hoarding money, not lending it, the sanest thing for the banks to do after the crash.)

Complexity is a clear and present danger to both firms and the global financial system: it makes both much harder to manage, govern, audit, regulate and support effectively in times of crisis. Without taming complexity, greater transparency and fuller disclosures don't necessarily help, …: making lots of raw data available just makes a bigger pile of hay in which to try to find the needle.

Unfortunately, human beings' psychological responses to risk often make the situation worse, through twin phenomena called risk compensation and risk homeostasis. … [As] we add safety features to a system, people will often change their behavior to act in a riskier way, betting (often subconsciously) that the system will be able to save them. People wearing seatbelts in cars with airbags and antilock brakes drive faster than those who don't, because they feel more protected … And we don't just adjust perceptions of our own safety, but of others' as well: for example, motorists have been found to pass more closely to bicyclists wearing helmets than to those who don't, betting (incorrectly) that helmets make cyclists safer than they actually do.

A related concept, risk homeostasis, suggests that, … we each have an internal, preferred level of risk tolerance — if one path for expressing one's innate appetite for risk is blocked, we will find another. In skydiving, this phenomenon gave rise, famously, to Booth's Rule #2, which states that "The safer skydiving gear becomes, the more chances skydivers will take, in order to keep the fatality rate constant."

Organizations also have a measure of risk homeostasis, expressed through their culture.

People who are naturally more risk-averse or more risk-tolerant than the culture of their organizations find themselves pressured, often covertly, to "get in line" or "get packing."

Ridiculous Risk Aversion - Lawyer Bollocks! (Photo credit: Danny McL)

Deepwater Horizon Oil Spill Site (Photo credit: Green Fire Productions)

Consider the problem of complexity and financial regulation. The elements of Dodd-Frank that have been written so far have drawn scorn … for doing little about the problem of too-big-to-fail banks; but they've done even less about the more serious problem of too-complex-to-manage institutions, …

Banks' advocates are quick to point out that many of the new regulations are contradictory, confusing and actually make things worse, …: adding too-complex regulation on top of a too-complex financial system could put us all, … in the cockpit of a doomed plane.

… [To] be explored here: to encourage a reduction in the complexity of both firms and the financial system as a whole, in exchange for reducing the number and complexity of regulations with which the banks have to comply. … Such a system would be easier to police and tougher to game.

Efforts at simplification also have to deal urgently with the problem of dense overconnection … In 2006, the Federal Reserve invited a group of researchers to study the connections between banks … What they discovered was shocking: just sixty-six banks, out of thousands, accounted for 75 percent of all the transfers. And twenty-five of these were completely interconnected to one another, …
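To put a number on "completely interconnected," assume (our reading, not a detail from the study) that every one of the twenty-five banks had a direct link to every other. The count of distinct bank-to-bank links is then simply the number of pairs:

$$
\binom{25}{2} = \frac{25 \times 24}{2} = 300
$$

Counting each direction of exposure separately (bank A owing bank B, and B owing A) would double that to 600, any one of which could carry trouble from one institution to the rest of the core.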

Little has been done about this dense structural overconnection since the crash, … Over the past two decades, the links between financial hubs like London, New York and Hong Kong have grown at least sixfold. If we reintroduce simplicity and modularity into the system, a crisis somewhere doesn't have to become a crisis everywhere.

… [Taking] steps to tame the complexity of a system is meaningless without also addressing incentives and culture, since people will inevitably drive a safer car more dangerously. To tackle this, organizations must learn to improve the "cognitive diversity" of their people and teams, getting people to think more broadly and diversely about the systems they inhabit. One of the pioneers in this effort is, … the U.S. Army.

|

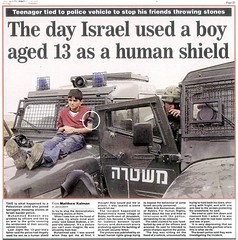

| human-shield-gaza (Photo credit: ` ³ok_qa³ `) |

Making one kind of mistake might get you killed; making another might prolong a war.

To combat this, retired army colonel Greg Fontenot and his colleagues at Fort Leavenworth, Kansas, started the University of Foreign Military and Cultural Studies, more commonly known by its nickname, Red Team University. The school is the hub of an effort to train … field operatives who bring critical thinking to the battlefield and help commanding officers avoid the perils of overconfidence, strategic brittleness, and groupthink. The goal is to respectfully help leaders in complex situations unearth untested assumptions, consider alternative interpretations and "think like the other" without sapping unit cohesion or morale, and while retaining their values.

Flight path of Air France Flight 447 on 31 May/1 June. The solid red line shows the actual route; the dashed line shows the planned route after the last transmission was heard. (Photo credit: Wikipedia)

More than 300 of these professional skeptics have since graduated from the program, and have fanned out through the Army's ranks. Their effects have been transformational — not only shaping a broad array of decisions and tactics, but also spreading a form of cultural change appropriate for both the institution and the complex times in which it now both fights and keeps the peace.

Structural simplification and cultural change efforts like these will never eliminate every surprise, of course, but undertaken together they just might ensure greater resilience — for everyone — in their aftermath.

Otherwise, like the pilots of Flight 447, we're just flying blind.
